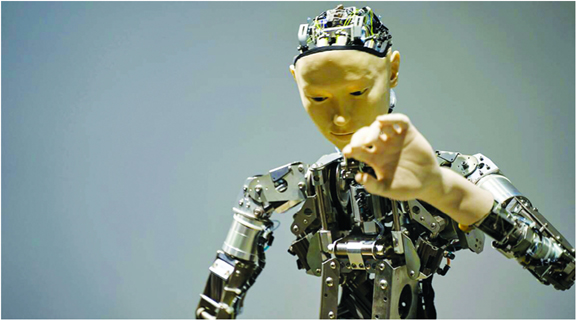

A robot operating with a popular internet-based artificial intelligence system consistently gravitated to men over women and to white people over people of colour, and jumped to conclusions about people’s jobs after a glance at their faces. These were the key findings of a study led by researchers from Johns Hopkins University, the Georgia Institute of Technology, and the University of Washington. The work is described in a research article titled “Robots Enact Malignant Stereotypes,” which is set to be published and presented this week at the 2022 Conference on Fairness, Accountability, and Transparency (ACM FAccT).

“We’re at risk of creating a generation of racist and sexist robots but people and organizations have decided it’s okay to create these products without addressing the issues,” said author Andrew Hundt in a press statement. Hundt, a postdoctoral fellow at Georgia Tech, co-conducted the work as a PhD student in Johns Hopkins’ Computational Interaction and Robotics Laboratory.

The researchers audited recently published robot manipulation methods, presenting them with objects bearing pictures of human faces that varied across race and gender. They then gave the robots task descriptions containing terms associated with common stereotypes. The experiments showed the robots acting out toxic stereotypes with respect to gender, race, and scientifically discredited physiognomy, the practice of assessing a person’s character and abilities based on how they look.

People who build artificial intelligence models to recognize humans and objects often use large datasets available for free on the internet. Because the internet contains a great deal of inaccurate and overtly biased content, algorithms built using this data can inherit the same problems. Researchers have previously demonstrated race and gender gaps in facial recognition products, as well as in CLIP, a neural network that compares images to captions. Robots rely on such neural networks to learn how to recognize objects and interact with the world. The research team therefore tested a publicly downloadable artificial intelligence model for robots that is built on the CLIP neural network, which helps the machine “see” and identify objects by name.

Source: The Indian Express
